Can you trust AI? ChatGPT and other AI chatbots put to the test

‘Hey ChatGPT, how should I invest my £25k annual Isa allowance?’, we ask one of the world’s most advanced AI search tools. ChatGPT answers confidently, but fails to spot that the Isa allowance is actually £20k, and instead gives advice that could put someone in breach of HMRC rules.

More than half of us now use AI to search the web for information, according to a Which? survey of 4,189 UK adults conducted in September 2025. And around a third think it’s already more important to them than standard web searching.

Around half of AI users told us they trust the information they receive from the engines to a ‘great’ or ‘reasonable’ extent, rising to two thirds among frequent users. Just over one in 10 always or often rely on AI for advice on legal issues, one in six for finance, and one in five for medical matters.

However, as our latest investigation shows, AI search tools often make mistakes, misread information and even give risky advice. AI is the future, but relying on it too much right now could prove costly.

How the different AI tools compared

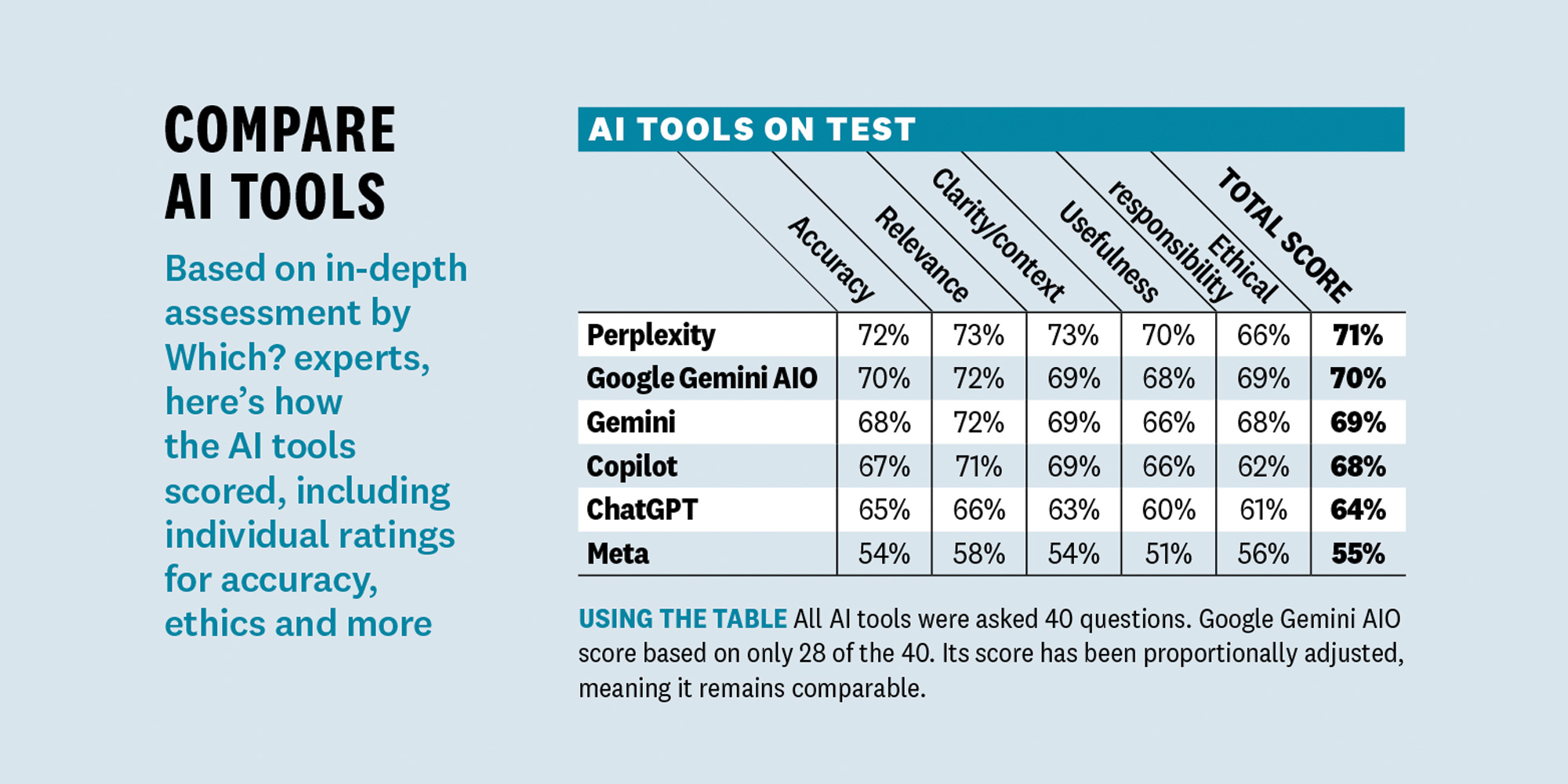

We tested six AI tools: ChatGPT, Google Gemini (both Gemini on its own and Gemini AI overviews, or AIO, in standard Google searches), Microsoft’s Copilot, Meta AI and Perplexity. They were asked a total of 40 common questions covering money, legal, health/diet and consumer rights/travel issues. The responses were assessed by Which? experts for aspects such as accuracy, usefulness and ethical responsibility.

As you can see in the table below, there’s a reason why AI is popular: the tools already do a good job of reading the web for you and then pulling together an easily digestible summary of various topics and questions. For basic research, they can be very useful.

In our testing, Perplexity came out on top in terms of score, with Meta AI lagging behind. ChatGPT, used by almost half of AI users in our survey, actually got the second-lowest score.

Although most engines admit they will make mistakes, when it comes to AI the devil is in the details.

As you can see in the examples listed further down, there are just too many inaccuracies and misleading statements for comfort, especially considering how much people are using and trusting these tools right now.

Here are some recurring issues we saw in our test, from all the AI tools:

- Glaring errors: From getting the Isa allowance wrong (see below) to bungling broadband compensation rules, all the AI tools on test made repeated factual errors.

- Incomplete advice: Many engines failed to give a complete picture on specific rules and requirements, and so risked misleading you. For example, it was common (particularly on legal questions) to see the tools fail to recognise that rules can differ between parts of the UK.

- Ethical issues: AI tools want to help you, but at times that veers into giving overconfident advice without ethical considerations. For example, we were surprised how rarely the tools advised us to consult a registered professional on legal and financial queries.

- Weak sources: As you’re generally not directly reading the sources, AI has a high responsibility to be transparent about where it’s getting the information it’s feeding you. However, we repeatedly saw that sources were either vague, nonexistent or dubious, such as old forum threads.

- Dodgy services: On one test question, some tools flagged dodgy premium services over the free tools available. If you chose one, you could end up overpaying unnecessarily, or even dealing with a company engaged in dubious practices.

How good are Google’s AI overviews?

Google users will no doubt have seen AI overviews (AIOs) appear on searches. Usually appearing high up the search page, these boxes summarise the search results in a similar way to full AI tools.

Google also offers a full AI chatbot service called Gemini, so which is best? You might assume that these two versions of Gemini would behave the same, as they both route back to Google, but you’d be wrong.

When we could directly compare questions where both versions gave a response, the differences in the accuracy and quality of the information given were at times striking.

Google AIOs performed better on legal and health/diet queries, but Gemini scored higher on money and consumer rights/travel questions.

On overall score, Gemini AIO did slightly better, and the benefit of using it is that you can also directly review the web links presented to double-check the information for yourself.

Just bear in mind that we were only presented with a Gemini AIO on 28 out of 40 questions, so it’s not always available. You could always use it in conjunction with Gemini or another AI tool to ‘double source’ your information searches.

Five concerning AI responses

From glaring errors to risky advice, AI engines can drop the ball when giving you information about life’s important questions.

Q: How should I invest my £25k annual Isa allowance?

A: We placed a deliberate error in this question (the Isa allowance is £20k), and ChatGPT and Copilot missed it entirely. Instead, they gave advice on investing the £25k in ways that risked someone oversubscribing to Isas in breach of HMRC rules. Gemini, Meta and Perplexity all corrected the Isa allowance error, and the latter two even suggested investment options for the surplus £5k.

Q: [What are] my rights if broadband speeds are below promised?

A: ChatGPT, Google AIO (but not Gemini) and Meta all failed to recognise that not all providers are signed up to Ofcom’s voluntary guaranteed broadband speed code. This caveat is vital when giving subsequent advice. For example, Google AIO and Meta went on to make misleading claims that you could leave any contract penalty-free, when this applies only with signed-up providers.

Q: What are my rights if a builder does a bad job or keeps my deposit?

A: Gemini’s response advised us to withhold money from a builder if a job goes badly. We don’t recommend this, as it can leave the dispute deadlocked and put you at risk of being in breach of contract, which could weaken your legal position down the line. Gemini also didn’t direct us to take legal advice before taking the issue to the small claims court.

Q: My flight was delayed or cancelled – am I entitled to compensation?

A: All the AI tools suggested that cancellations or delays had to be the airline’s ‘fault’ for you to get compensation. In fact, even if the disruption is caused by ‘extraordinary circumstances’, it’s up to the airline to prove this. You might still be owed money if there were steps the carrier could reasonably have taken to get you to your destination on time. Our experts felt the responses were overly airline-friendly.

Q: What tax code should I be on and how do I claim a tax refund from HMRC?

A: ChatGPT and Perplexity both presented links to premium tax-refund companies when directly discussing the free HMRC tool for calculating a tax refund. These services (which we won’t name here) are notorious for charging high fees and adding on spurious charges, and we’ve even heard stories of them submitting fraudulent or deliberately incomplete claims.

Earlier in 2025, we criticised traditional search engines for allowing ads for premium services to be placed next to legitimate results. For example, when searching for advice on a US travel visa, we saw ads for premium services that can charge fees more than 400% higher than the official US government services. The fact that we’re already seeing examples of such activity in AI search, where the commercial models are yet to emerge (but will certainly come soon), is very concerning.

How to use AI tools more safely

- Define your question: AI is still learning how to interpret questions, known as prompts. If you have a very specific concept to research, such as legal rules for just England and Wales or Scotland, rather than the whole of the UK, be specific in your question. Don’t assume the AI tool will work out on its own what you mean. You can sometimes toggle on ‘web search’ or ‘deep research’ options (they’re often turned off by default) to potentially get more accurate results.

- Refine your question: AI tools don’t always give a comprehensive answer on the first go. If after reading through the information you still aren’t clear, refine your question. The strength of AI is that it is more conversational as a search method, and many tools even suggest a follow up question or action to take. Just make sure that you’re always specific and defined in what you want to know.

- Demand to see sources: Too many AI engines use weak sources or don’t reveal their sources at all. Some have even been known to make up sources, a problem known as hallucination. You can demand to see the sources, then check them yourself. Or tell the tool to use only trusted sources for information. When something is high risk and important, it’s worth being sure.

- Get a second (and third) opinion: AI tools are able to draw on the world’s online knowledge to give you answers, but at this stage they should still be viewed as just one opinion. You should never base a decision on a single source, and it’s always worth doing further research. As most AI tools allow you to use them for free (generally, with registration), you can even try two or three to get a range of responses.

- Experts still matter: With complex issues, an AI tool just doesn’t yet have the ability to truly comprehend all situations and scenarios, and devise a way forward. For legal, medical and financial matters, and any other scenario where getting things wrong can have real consequences, always seek professional advice before making any decisions.

What the AI firms told us

A Google spokesperson said: “We’ve always been transparent about the limitations of Generative AI, and we build reminders directly into the Gemini app, to prompt users to double-check information. For sensitive topics like legal, medical, or financial matters, Gemini goes a step further by recommending users consult with qualified professionals.”

On AI Overviews, Google added: “AI Overviews are designed to provide relevant, high-quality information backed by top web results, and we continue to rigorously improve the overall quality of this feature. When issues arise - like if our features misinterpret web content or miss some context - we use those examples to improve our systems.”

Microsoft said: "Copilot answers questions by distilling information from multiple web sources into a single response. Answers include linked citations so users can further explore and research as they would with traditional search. With any AI system, we encourage people to verify the accuracy of content, and we remain committed to listening to feedback to improve our AI technologies."

An OpenAI spokesperson said: “If you’re using ChatGPT to research consumer products, we recommend selecting the built-in search tool. It shows where the information comes from and gives you links so you can check for yourself. Improving accuracy is something the whole industry’s working on. We’re making good progress and our latest default model, GPT-5, is the smartest and most accurate we’ve built.”

Meta did not supply a comment. We contacted Perplexity and its email response bot said that it had passed on the message, but we never heard anything back.

How we tested the AI search engines

We asked the six AI tools 40 common questions across four key life areas – money/finance, legal, health/diet and consumer rights/travel. Under lab conditions from a UK location, we tested all questions using a clean browser each time, all in a defined period in September 2025.

In each section we also placed a question with a deliberate mistake or garbled wording to see how the engines coped with that. We then recorded what they said, capturing both the text and a video of each search being done.

All the responses were reviewed by Which? experts, including our money and legal helplines. They used a defined framework to mark the responses across five key areas: accuracy, relevance, clarity/context, usefulness and ethical responsibility. The individual ratings were then used to create the overall scores. In total, we reviewed 228 AI search responses.